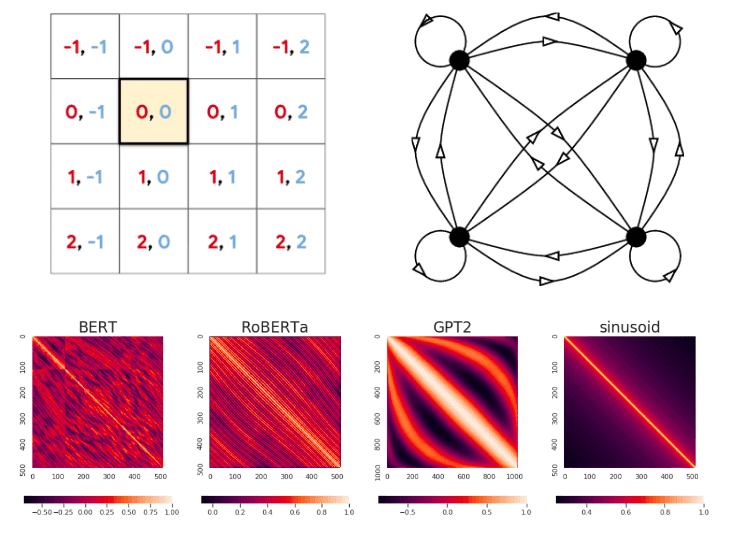

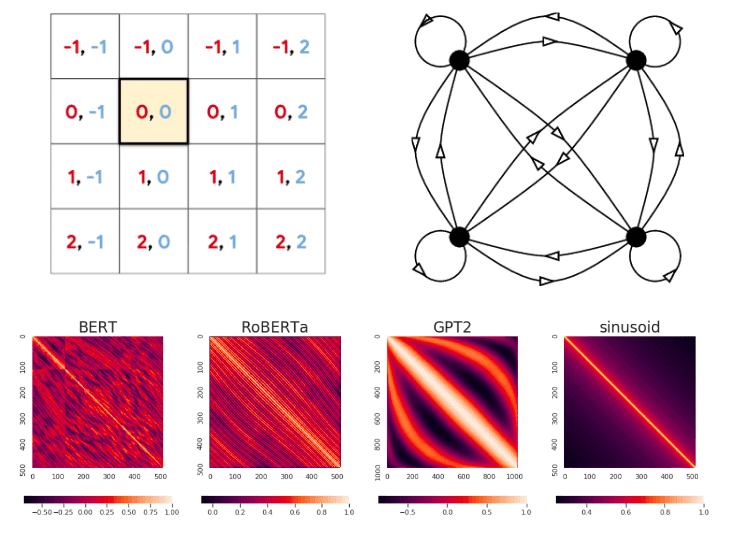

How Positional Embeddings work in Self-Attention (code in PyTorch)

Understand how positional embeddings emerged and how we use them inside self-attention to model highly structured data such as images.

Apr 10, 2025



