A Coding Implementation with Arcade: Integrating Gemini Developer API Tools into LangGraph Agents for Autonomous AI Workflows

The post A Coding Implementation with Arcade: Integrating Gemini Developer API Tools into LangGraph Agents for Autonomous AI Workflows appeared first on MarkTechPost.

Arcade turns LangGraph agents from static conversational interfaces into action-driven assistants. It provides a large set of ready-made tools, including web scraping and search, along with specialized APIs for finance, maps, and more. In this tutorial, we learn how to initialize ArcadeToolManager, fetch individual tools (such as Web.ScrapeUrl) or entire toolkits, and connect them to Google’s Gemini Developer API chat model through LangChain’s ChatGoogleGenerativeAI. In a few steps, we install the dependencies, securely load the API keys, retrieve and inspect the tools, configure the Gemini model, and spin up a ReAct-style agent with checkpointed memory. Throughout, Arcade’s Python interface keeps the code concise and the focus on building real-world workflows, with no low-level HTTP calls or manual parsing required.

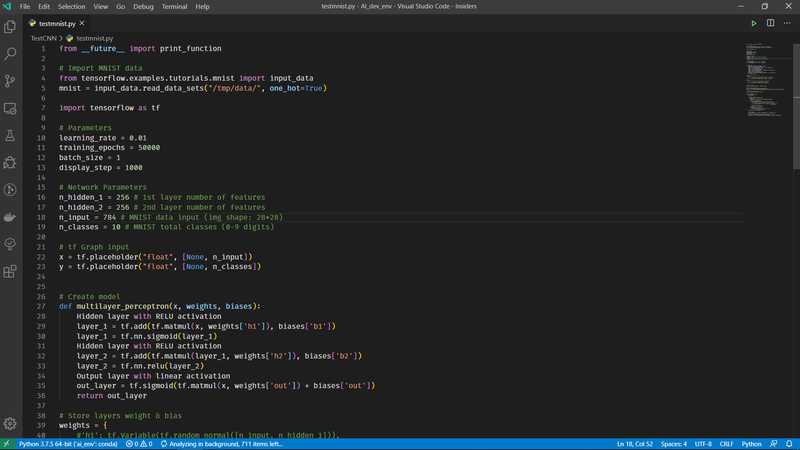

```python
!pip install langchain langchain-arcade langchain-google-genai langgraph
```

We install all the core libraries you need in one go: LangChain’s core functionality, the Arcade integration for fetching and managing external tools, the Google GenAI connector for accessing Gemini with an API key, and LangGraph’s orchestration framework.
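The LangChain, LangGraph, and Arcade packages change quickly, and their APIs can differ between releases, so it can help to confirm which versions were actually installed before continuing. The sketch below uses only the standard library's `importlib.metadata`. The helper name `installed_versions` is our own and is not part of any of these packages.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None  # not installed in this environment
    return found


if __name__ == "__main__":
    required = ["langchain", "langchain-arcade", "langchain-google-genai", "langgraph"]
    for pkg, ver in installed_versions(required).items():
        print(f"{pkg}: {ver or 'NOT INSTALLED'}")
```

If any package shows as not installed, re-run the install cell (and restart the runtime if your notebook environment requires it) before moving on.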

```python
from getpass import getpass
import os

# Prompt for each key only if it is not already set in the environment.
if "GOOGLE_API_KEY" not in os.environ:
    os.environ["GOOGLE_API_KEY"] = getpass("Gemini API Key: ")

if "ARCADE_API_KEY" not in os.environ:
    os.environ["ARCADE_API_KEY"] = getpass("Arcade API Key: ")
```

We securely prompt for your Gemini and Arcade API keys without echoing them to the screen. Each key is stored as an environment variable, and the prompt appears only if that variable is not already defined. This keeps your credentials out of the notebook code.
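The same "prompt only if missing" pattern can be wrapped in a small reusable function. This is a minimal sketch. `ensure_env_var` is a hypothetical helper name, and the `reader` parameter exists only so the prompt can be swapped out, for example in tests.

```python
import os
from getpass import getpass


def ensure_env_var(name, prompt, reader=getpass):
    """Return os.environ[name], prompting (without echo) only if it is unset or empty."""
    value = os.environ.get(name)
    if not value:
        value = reader(prompt)  # getpass hides the typed characters
        os.environ[name] = value
    return value


# Usage in the notebook (each call prompts only when the variable is missing):
# ensure_env_var("GOOGLE_API_KEY", "Gemini API Key: ")
# ensure_env_var("ARCADE_API_KEY", "Arcade API Key: ")
```

Unlike the two `if` blocks, this version also re-prompts when a variable exists but is empty. That is a deliberate choice, because an empty key would only fail later with a less obvious authentication error.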

```python
from langchain_arcade import ArcadeToolManager

# Authenticate with Arcade, then fetch one specific tool plus the whole Google toolkit.
manager = ArcadeToolManager(api_key=os.environ["ARCADE_API_KEY"])
tools = manager.get_tools(tools=["Web.ScrapeUrl"], toolkits=["Google"])

print("Loaded tools:", [t.name for t in tools])
```

We initialize the ArcadeToolManager with your API key, then fetch both the Web.ScrapeUrl tool and the full Google toolkit. Finally, we print the names of the loaded tools so you can confirm which capabilities are now available to the agent.
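Instead of eyeballing the printed list, you can fail fast when an expected tool did not load, for example because a toolkit name was mistyped. The sketch below works on any objects with a `.name` attribute, which LangChain tools have. `check_tools` is a hypothetical helper, and the `SimpleNamespace` stand-ins and their names are placeholders. The converted LangChain tool names may not match the `Toolkit.Tool` spelling exactly, so compare against the names the print statement actually shows.

```python
from types import SimpleNamespace


def check_tools(tools, required):
    """Return the set of loaded tool names; raise if any required name is missing."""
    loaded = {t.name for t in tools}
    missing = sorted(set(required) - loaded)
    if missing:
        raise ValueError(f"Missing tools: {missing}; loaded: {sorted(loaded)}")
    return loaded


# Placeholder objects for illustration; in the notebook, pass the real `tools` list.
demo_tools = [SimpleNamespace(name="tool_a"), SimpleNamespace(name="tool_b")]
print(check_tools(demo_tools, ["tool_a"]))
```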

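```python
from langchain_google_genai import ChatGoogleGenerativeAI
from langgraph.checkpoint.memory import MemorySaver

model = ChatGoogleGenerativeAI(
    model="gemini-1.5-flash",
    temperature=0,     # minimize sampling randomness
    max_tokens=None,   # use the model's default output limit
    timeout=None,
    max_retries=2,     # retry transient API failures
)

# Expose the Arcade tools to Gemini so it can emit structured tool calls.
bound_model = model.bind_tools(tools)

# In-memory checkpointer: saves agent state per thread for the lifetime of this process.
memory = MemorySaver()
```

We initialize the Gemini Developer API chat model (gemini-1.5-flash) with temperature 0 so its replies are as repeatable as possible, bind the Arcade tools so the agent can call them during its reasoning, and set up a MemorySaver that checkpoints the agent’s state after each step. Because MemorySaver keeps these checkpoints in memory, the conversation history lasts only as long as the notebook session.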
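Binding tools means describing each one to the model as a name, a natural-language description, and a JSON Schema for its arguments. When the model decides a tool is needed, it replies with a structured tool call instead of free text. LangChain generates these descriptions for you. The standard-library sketch below only illustrates the idea for a plain Python function. `describe_function` and `scrape_url` are hypothetical, and the type mapping is deliberately simplified. The actual payload Gemini receives is built by `langchain-google-genai`, not by this code.

```python
import inspect
import json

# Simplified mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(fn):
    """Build a tool description (name, description, parameters) from a function."""
    sig = inspect.signature(fn)
    properties, required = {}, []
    for pname, param in sig.parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[pname] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(pname)  # no default, so the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def scrape_url(url: str, max_chars: int = 5000) -> str:
    """Fetch a web page and return its text content."""
    raise NotImplementedError  # hypothetical tool; only its signature matters here


print(json.dumps(describe_function(scrape_url), indent=2))
```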

```python
from langgraph.prebuilt import create_react_agent

# Wire the tool-enabled model, the tools themselves, and the checkpointer into one agent graph.
graph = create_react_agent(
    model=bound_model,
    tools=tools,
    checkpointer=memory,
)
```

We spin up a ReAct-style LangGraph agent that wires together the bound Gemini model, the fetched Arcade tools, and the MemorySaver checkpointer. The agent alternates between reasoning and tool calls, feeding each tool result back to the model, and its state persists across calls that share the same thread.
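At a high level, a ReAct agent runs a loop. It calls the model, and if the model requested tools, it executes them, appends their results to the message history, and calls the model again. This repeats until the model answers without requesting a tool. The checkpointer stores that history per `thread_id`, which is why later calls on the same thread remember earlier turns. The sketch below reproduces that control flow with a scripted stand-in model. `run_react`, `scripted_model`, the `threads` dictionary, and the message-dict format are hypothetical simplifications and do not reflect LangGraph's actual internals.

```python
from collections import defaultdict

# Per-thread message history, standing in for a checkpointer such as MemorySaver.
threads = defaultdict(list)


def run_react(model, tools, thread_id, user_message, max_steps=5):
    """Loop model -> tools -> model until the model returns a final answer."""
    history = threads[thread_id]
    history.append({"role": "user", "content": user_message})
    for _ in range(max_steps):
        reply = model(history)
        history.append(reply)
        calls = reply.get("tool_calls", [])
        if not calls:
            return reply["content"]  # no tool requested: this is the final answer
        for call in calls:
            result = tools[call["name"]](**call["args"])
            history.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


def scripted_model(history):
    """Stand-in model: requests one tool call, then answers using the tool result."""
    if history[-1]["role"] == "user":
        return {"role": "assistant", "content": "",
                "tool_calls": [{"name": "add", "args": {"a": 2, "b": 3}}]}
    return {"role": "assistant", "content": f"The sum is {history[-1]['content']}."}


answer = run_react(scripted_model, {"add": lambda a, b: a + b}, "1", "What is 2 + 3?")
print(answer)
```

The `max_steps` cap serves the same purpose as a recursion limit: it stops a model that keeps requesting tools from looping forever.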

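```python
from langgraph.errors import NodeInterrupt

# thread_id selects the checkpointed conversation; user_id identifies the user for Arcade tool authorization.
config = {
    "configurable": {
        "thread_id": "1",
        "user_id": "user@example.com",
    }
}

user_input = {
    "messages": [
        ("user", "List any new and important emails in my inbox."),
    ]
}

try:
    # stream_mode="values" yields the full state after each step; print the newest message.
    for chunk in graph.stream(user_input, config, stream_mode="values"):
        chunk["messages"][-1].pretty_print()
except NodeInterrupt as exc:
    # Raised when a tool cannot run yet, e.g. because the user must first authorize Gmail access.
    print(f"\n{exc}")
```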
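In this configuration, `thread_id` selects which checkpointed conversation the run continues, and `user_id` identifies the user on whose behalf Arcade runs tools. The Gmail tools in the Google toolkit need that user's OAuth consent, and the `NodeInterrupt` handler appears intended to catch the case where consent has not been given yet. The standard-library sketch below illustrates that interrupt-then-retry pattern in isolation. It is not Arcade's implementation. `AuthorizationRequired`, `list_emails`, `run_with_auth`, and the in-memory `authorized_users` store are all hypothetical, and the example.com URL is a placeholder.

```python
class AuthorizationRequired(Exception):
    """Hypothetical signal that a tool needs the user's consent before it can run."""

    def __init__(self, tool_name, auth_url):
        super().__init__(f"{tool_name} requires authorization: {auth_url}")
        self.auth_url = auth_url


authorized_users = set()  # fake store of users who have completed consent


def list_emails(user_id):
    """Hypothetical tool that refuses to run until the user has authorized it."""
    if user_id not in authorized_users:
        raise AuthorizationRequired("list_emails", f"https://example.com/auth?user={user_id}")
    return []  # a real tool would return the user's messages here


def run_with_auth(tool, user_id, authorize):
    """Call the tool; on AuthorizationRequired, let the user authorize, then retry once."""
    try:
        return tool(user_id)
    except AuthorizationRequired as exc:
        print(f"Interrupted: {exc}")
        authorize(user_id, exc.auth_url)  # e.g. the user opens the URL and approves
        return tool(user_id)


result = run_with_auth(list_emails, "user@example.com",
                       authorize=lambda uid, url: authorized_users.add(uid))
print(result)
```

In the notebook, the retry step roughly corresponds to the user completing the authorization link and then re-running the streaming cell with the same `config`.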