AI Inference at Scale: Exploring NVIDIA Dynamo’s High-Performance Architecture

As Artificial Intelligence (AI) technology advances, the need for efficient and scalable inference solutions has grown rapidly. Soon, AI inference is expected to become more important than training as companies focus on quickly running models to make real-time predictions. This transformation emphasizes the need for a robust infrastructure to handle large amounts of data with minimal delays.

Inference is vital in industries like autonomous vehicles, fraud detection, and real-time medical diagnostics. However, it has unique challenges, particularly when scaling to meet the demands of tasks like video streaming, live data analysis, and customer insights. Traditional serving systems struggle to handle these high-throughput tasks efficiently, often leading to high costs and delays. As businesses expand their AI capabilities, they need solutions that can manage large volumes of inference requests without sacrificing performance or increasing costs.

This is where NVIDIA Dynamo comes in. Launched in March 2025, Dynamo is a new AI framework designed to tackle the challenges of AI inference at scale. It helps businesses accelerate inference workloads while maintaining strong performance and decreasing costs. Built on NVIDIA's robust GPU architecture and integrated with tools like CUDA, TensorRT, and Triton, Dynamo is changing how companies manage AI inference, making it easier and more efficient for businesses of all sizes.

The Growing Challenge of AI Inference at Scale

AI inference is the process of using a pre-trained machine learning model to make predictions from real-world data, and it is essential for many real-time AI applications. However, traditional systems often face difficulties handling the increasing demand for AI inference, especially in areas like autonomous vehicles, fraud detection, and healthcare diagnostics.

The demand for real-time AI is growing rapidly, driven by the need for fast, on-the-spot decision-making. A May 2024 Forrester report found that 67% of businesses integrate generative AI into their operations, highlighting the importance of real-time AI. Inference is at the core of many AI-driven tasks, such as enabling self-driving cars to make quick decisions, detecting fraud in financial transactions, and assisting in medical diagnoses like analyzing medical images.

Despite this demand, traditional systems struggle to handle the scale of these tasks. One of the main issues is the underutilization of GPUs. For instance, GPU utilization in many systems remains around 10% to 15%, meaning most of the available computational power sits idle. As the workload for AI inference increases, additional challenges arise, such as memory limits and cache thrashing, which cause delays and reduce overall performance.
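A rough back-of-the-envelope calculation shows why utilization in that range is costly. The sketch below divides an hourly GPU price by the fraction of time the GPU does useful work. The price is a hypothetical figure chosen only for illustration, not a quoted rate, and the function name is likewise an assumption.

```python
def cost_per_useful_gpu_hour(hourly_price: float, utilization: float) -> float:
    """Effective price of one hour of GPU work that is actually used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_price / utilization


# Assumed price in USD per GPU-hour, for illustration only.
HYPOTHETICAL_PRICE = 3.00

for utilization in (0.10, 0.15, 0.60):
    cost = cost_per_useful_gpu_hour(HYPOTHETICAL_PRICE, utilization)
    print(f"{utilization:.0%} utilized: ${cost:.2f} per useful GPU-hour")
```

Under these assumptions, a GPU that is busy 10% of the time costs ten times its list price per hour of useful work, which is why raising utilization translates so directly into lower inference costs.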

Achieving low latency is crucial for real-time AI applications, but many traditional systems struggle to keep up, especially when using cloud infrastructure. A McKinsey report reveals that 70% of AI projects fail to meet their goals due to data quality and integration issues. These challenges underscore the need for more efficient and scalable solutions; this is where NVIDIA Dynamo steps in.

Optimizing AI Inference with NVIDIA Dynamo

NVIDIA Dynamo is an open-source, modular framework that optimizes large-scale AI inference tasks in distributed multi-GPU environments. It aims to tackle common challenges in generative AI and reasoning models, such as GPU underutilization, memory bottlenecks, and inefficient request routing. Dynamo combines hardware-aware optimizations with software innovations to address these issues, offering a more efficient solution for high-demand AI applications.

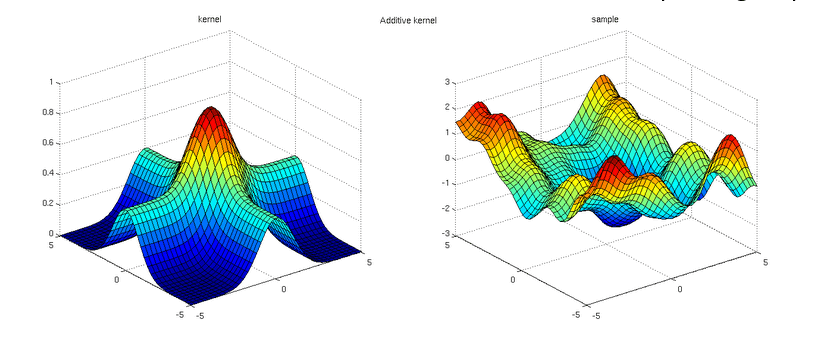

One of the key features of Dynamo is its disaggregated serving architecture. This approach separates the computationally intensive prefill phase, which handles context processing, from the decode phase, which involves token generation. By assigning each phase to distinct GPU clusters, Dynamo allows for independent optimization. The prefill phase uses high-memory GPUs for faster context ingestion, while the decode phase uses latency-optimized GPUs for efficient token streaming. This separation improves throughput, with NVIDIA reporting up to double the performance for models like Llama 70B.
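The split between the two phases can be pictured with a toy model. The following is a minimal sketch, not Dynamo's API: every class and method name here (`KVCache`, `PrefillWorker`, `DecodeWorker`, `DisaggregatedServer`) is hypothetical, the "model" is faked, and round-robin assignment is an assumption made to keep the example short.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class KVCache:
    """Stand-in for the attention key/value state produced by prefill."""
    request_id: str
    prompt_tokens: list


@dataclass
class PrefillWorker:
    name: str

    def prefill(self, request_id: str, prompt: str) -> KVCache:
        # A real prefill pass runs the whole prompt through the model at once.
        return KVCache(request_id, prompt.split())


@dataclass
class DecodeWorker:
    name: str

    def decode(self, cache: KVCache, max_new_tokens: int) -> list:
        # A real decode loop emits one token per step and reuses the cache.
        return [f"tok{i}" for i in range(max_new_tokens)]


class DisaggregatedServer:
    """Sends each request through a prefill pool, then a separate decode pool."""

    def __init__(self, prefill_pool, decode_pool):
        self._prefill = cycle(prefill_pool)  # round-robin is an assumption
        self._decode = cycle(decode_pool)
        self.log = []

    def generate(self, request_id: str, prompt: str, max_new_tokens: int = 4):
        prefill_worker = next(self._prefill)
        cache = prefill_worker.prefill(request_id, prompt)
        # In a real system the KV cache is transferred between GPUs here.
        decode_worker = next(self._decode)
        self.log.append((request_id, prefill_worker.name, decode_worker.name))
        return decode_worker.decode(cache, max_new_tokens)


server = DisaggregatedServer(
    [PrefillWorker("prefill-0")],
    [DecodeWorker("decode-0"), DecodeWorker("decode-1")],
)
server.generate("req-1", "explain disaggregated serving")
server.generate("req-2", "summarize this document")
print(server.log)
```

Because the pools are separate objects, each can be sized independently: this example runs one prefill worker against two decode workers, which is the kind of per-phase tuning the architecture is meant to allow.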

Dynamo also includes a GPU resource planner that dynamically schedules GPU allocation based on real-time utilization, balancing workloads between the prefill and decode clusters to prevent over-provisioning and idle cycles. Another key feature is the KV cache-aware smart router, which directs incoming requests to GPUs that already hold relevant key-value (KV) cache data, thereby minimizing redundant computations and improving efficiency. This feature is particularly beneficial for multi-step reasoning models that generate more tokens than standard large language models.
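Both ideas can be sketched in a few lines. The code below is an illustration under stated assumptions rather than Dynamo's actual logic: the scoring rule (cached prefix length minus a load penalty), the `gap` threshold, and all names (`Worker`, `route`, `rebalance`) are hypothetical.

```python
from dataclasses import dataclass, field


def shared_prefix_len(a, b) -> int:
    """Number of leading tokens the two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


@dataclass
class Worker:
    name: str
    active_requests: int = 0
    cached_prompts: list = field(default_factory=list)  # token lists held in KV cache

    def best_overlap(self, tokens) -> int:
        return max((shared_prefix_len(tokens, c) for c in self.cached_prompts), default=0)


def route(tokens, workers, load_penalty: float = 1.0) -> Worker:
    """Pick the worker with the best trade-off between cache reuse and load."""
    return max(
        workers,
        key=lambda w: w.best_overlap(tokens) - load_penalty * w.active_requests,
    )


def rebalance(prefill_gpus, decode_gpus, prefill_util, decode_util, gap=0.2):
    """Move one GPU toward the busier pool when utilization differs by more than gap."""
    if prefill_util - decode_util > gap and decode_gpus > 1:
        return prefill_gpus + 1, decode_gpus - 1
    if decode_util - prefill_util > gap and prefill_gpus > 1:
        return prefill_gpus - 1, decode_gpus + 1
    return prefill_gpus, decode_gpus


busy = Worker("gpu-a", active_requests=2,
              cached_prompts=[["system", "you", "are", "helpful"]])
idle = Worker("gpu-b")
request = ["system", "you", "are", "helpful", "hello"]
print(route(request, [busy, idle]).name)
print(rebalance(4, 4, prefill_util=0.9, decode_util=0.3))
```

In this toy setup the request goes to the busier worker because reusing four cached tokens outweighs its load, while a request with no cached prefix would go to the idle one. The planner function moves a single GPU per call, a deliberately conservative choice that avoids oscillating between pools.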

The NVIDIA Inference TranXfer Library (NIXL) is another critical component, enabling low-latency communication between GPUs and heterogeneous memory/storage tiers like HBM and NVMe. This feature supports sub-millisecond KV cache retrieval, which is crucial for time-sensitive tasks. The distributed KV cache manager also helps offload less frequently accessed cache data to system memory or SSDs, freeing up GPU memory for active computations. Combined with Dynamo's other optimizations, this approach contributes to performance gains of up to 30x for large models like DeepSeek-R1 671B.
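The offloading idea resembles a two-tier cache. The sketch below assumes a least-recently-used eviction policy, which the description above does not specify, and uses plain Python dictionaries in place of GPU and host memory. The class name `TieredKVCache` and its methods are hypothetical.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache: a small hot tier backed by a larger cold tier."""

    def __init__(self, gpu_capacity: int):
        if gpu_capacity < 1:
            raise ValueError("gpu_capacity must be at least 1")
        self.gpu_capacity = gpu_capacity
        self.gpu = OrderedDict()  # hot tier, least recently used entry first
        self.host = {}            # cold tier (system memory or SSD in practice)

    def put(self, key, blocks):
        self.host.pop(key, None)  # an entry lives in exactly one tier
        self.gpu[key] = blocks
        self.gpu.move_to_end(key)
        self._offload_cold_entries()

    def get(self, key):
        if key in self.gpu:
            self.gpu.move_to_end(key)  # mark as recently used
            return self.gpu[key]
        if key in self.host:
            blocks = self.host.pop(key)
            self.put(key, blocks)      # promote back to the hot tier
            return blocks
        return None

    def _offload_cold_entries(self):
        while len(self.gpu) > self.gpu_capacity:
            cold_key, blocks = self.gpu.popitem(last=False)
            self.host[cold_key] = blocks


cache = TieredKVCache(gpu_capacity=2)
for session in ("chat-1", "chat-2", "chat-3"):
    cache.put(session, f"kv-blocks-for-{session}")
print(sorted(cache.gpu), sorted(cache.host))
```

With a hot-tier capacity of two, inserting a third session pushes the oldest one to the cold tier instead of discarding it, so a later request for that session can restore its cache rather than recompute it from the prompt.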

NVIDIA Dynamo integrates with NVIDIA’s full stack, including CUDA, TensorRT, and Blackwell GPUs, while supporting popular inference backends like vLLM and TensorRT-LLM. Benchmarks show up to 30 times higher tokens per GPU per second for models like DeepSeek-R1 on GB200 NVL72 systems.

As the successor to the Triton Inference Server, Dynamo is designed for AI factories requiring scalable, cost-efficient inference solutions. It benefits autonomous systems, real-time analytics, and multi-model agentic workflows. Its open-source and modular design also enables easy customization, making it adaptable for diverse AI workloads.

Real-World Applications and Industry Impact

NVIDIA Dynamo has demonstrated value across industries where real-time AI inference is critical. It enhances autonomous systems, real-time analytics, and AI factories, enabling high-throughput AI applications.

Companies like Together AI have used Dynamo to scale inference workloads, achieving up to 30x capacity boosts when running DeepSeek-R1 models on NVIDIA Blackwell GPUs. Additionally, Dynamo’s intelligent request routing and GPU scheduling improve efficiency in large-scale AI deployments.

Competitive Edge: Dynamo vs. Alternatives

NVIDIA Dynamo offers key advantages over alternatives like AWS Inferentia and Google TPUs. It is designed to handle large-scale AI workloads efficiently, optimizing GPU scheduling, memory management, and request routing to improve performance across multiple GPUs. Unlike AWS Inferentia, which is closely tied to AWS cloud infrastructure, Dynamo provides flexibility by supporting both hybrid cloud and on-premises deployments, helping businesses avoid vendor lock-in.

One of Dynamo's strengths is its open-source modular architecture, allowing companies to customize the framework based on their needs. It optimizes every step of the inference process, ensuring AI models run smoothly and efficiently while making the best use of available computational resources. With its focus on scalability and flexibility, Dynamo is suitable for enterprises looking for a cost-effective and high-performance AI inference solution.

The Bottom Line

NVIDIA Dynamo is transforming AI inference by providing a scalable and efficient solution to the challenges businesses face with real-time AI applications. Its open-source, modular design allows it to optimize GPU usage, manage memory more effectively, and route requests more intelligently, making it well suited to large-scale AI tasks. By separating the prefill and decode phases and reallocating GPUs dynamically between them, Dynamo boosts performance and reduces costs.

Unlike traditional systems or competitors, Dynamo supports hybrid cloud and on-premises setups, giving businesses more flexibility and reducing dependency on any single provider. With its performance and adaptability, NVIDIA Dynamo sets a new standard for AI inference, offering companies an advanced, cost-efficient, and scalable solution for their AI needs.

The post AI Inference at Scale: Exploring NVIDIA Dynamo’s High-Performance Architecture appeared first on Unite.AI.
