Google AI Introduces Ironwood: A Google TPU Purpose-Built for the Age of Inference

At the 2025 Google Cloud Next event, Google introduced Ironwood, its latest generation of Tensor Processing Units (TPUs), designed specifically for large-scale AI inference workloads. This release marks a strategic shift toward optimizing infrastructure for inference, reflecting the increasing operational focus on deploying AI models rather than training them.

Ironwood is the seventh generation of Google’s TPU architecture and brings substantial improvements in compute performance, memory capacity, and energy efficiency. Each chip delivers a peak throughput of 4,614 teraflops (TFLOPs) and includes 192 GB of high-bandwidth memory (HBM) with bandwidth of up to 7.4 terabytes per second (TB/s). Ironwood can be deployed in configurations of 256 or 9,216 chips; the larger configuration offers up to 42.5 exaflops of peak compute (9,216 chips × 4,614 TFLOPs each), making it one of the most powerful AI accelerator systems in the industry.
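
The pod-level figures follow directly from the per-chip numbers quoted above. The short Python sketch below reproduces that arithmetic; it assumes peak throughput and HBM capacity add up linearly across chips, which describes a theoretical ceiling rather than sustained performance. The function and constant names are illustrative only and are not part of any Google API.

```python
# Per-chip figures as quoted in the article (peak values, not sustained).
PEAK_TFLOPS_PER_CHIP = 4_614
HBM_GB_PER_CHIP = 192
POD_SIZES = (256, 9_216)


def pod_totals(chips: int) -> dict:
    """Aggregate peak compute and HBM capacity, assuming linear scaling."""
    total_tflops = chips * PEAK_TFLOPS_PER_CHIP
    return {
        "chips": chips,
        # 1 exaflop = 1,000,000 teraflops
        "peak_exaflops": total_tflops / 1_000_000,
        # decimal terabytes: 1 TB = 1,000 GB
        "hbm_terabytes": chips * HBM_GB_PER_CHIP / 1_000,
    }


for size in POD_SIZES:
    totals = pod_totals(size)
    print(
        f"{totals['chips']:>5} chips: "
        f"{totals['peak_exaflops']:.2f} exaflops peak, "
        f"{totals['hbm_terabytes']:.1f} TB HBM"
    )
```

For the 9,216-chip configuration this gives roughly 42.5 exaflops, matching the headline number, along with about 1.77 petabytes of aggregate HBM.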

Unlike previous TPU generations that balanced training and inference workloads, Ironwood is engineered specifically for inference. This reflects a broader industry trend where inference, particularly for large language and generative models, is emerging as the dominant workload in production environments. Low-latency and high-throughput performance are critical in such scenarios, and Ironwood is designed to meet those demands efficiently.

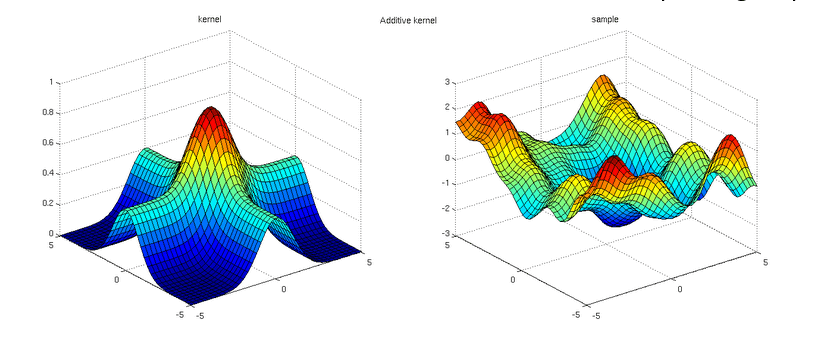

A key architectural advancement in Ironwood is the enhanced SparseCore, which accelerates sparse operations commonly found in ranking and retrieval-based workloads. This targeted optimization limits data movement across the chip and reduces both latency and power consumption for these inference-heavy use cases.
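
To make "sparse operations" concrete, the sketch below shows the kind of access pattern that is typical in ranking and recommendation models: looking up a handful of rows in a large embedding table and pooling them. It is a plain-Python illustration of the workload shape, not a description of how SparseCore is implemented or programmed; the function name and the toy table are hypothetical.

```python
def embedding_bag_sum(table, ids):
    """Gather the rows named by `ids` from `table` and sum them elementwise.

    Only len(ids) rows are touched, however large the table is. That
    irregular, memory-bound access is what makes the operation "sparse".
    """
    dim = len(table[0])
    pooled = [0.0] * dim
    for row_id in ids:
        row = table[row_id]
        for d in range(dim):
            pooled[d] += row[d]
    return pooled


# Toy table: 1,000 rows of 2-dimensional embeddings (illustrative values).
table = [[float(r), float(r) * 0.5] for r in range(1000)]

# A single request touches 3 of the 1,000 rows.
pooled = embedding_bag_sum(table, [3, 17, 256])
print(pooled)  # [276.0, 138.0]
```

Production embedding tables can hold millions of rows, so the cost of such a lookup is dominated by fetching scattered rows rather than by arithmetic, which is why reducing data movement matters for this class of workload.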

Ironwood also improves energy efficiency significantly, offering more than double the performance per watt of its predecessor. As AI model deployment scales, energy usage becomes an increasingly important constraint, both economically and environmentally. Ironwood's efficiency gains help address that constraint in large-scale cloud infrastructure.

The TPU is integrated into Google’s broader AI Hypercomputer framework, a modular compute platform combining high-speed networking, custom silicon, and distributed storage. This integration simplifies the deployment of resource-intensive models, enabling developers to serve real-time AI applications without extensive configuration or tuning.

This launch also signals Google’s intent to remain competitive in the AI infrastructure space, where companies such as Amazon and Microsoft are developing their own in-house AI accelerators. While industry leaders have traditionally relied on GPUs, particularly from Nvidia, the emergence of custom silicon solutions is reshaping the AI compute landscape.

Ironwood’s release reflects the growing maturity of AI infrastructure, where efficiency, reliability, and deployment readiness are now as important as raw compute power. By focusing on inference-first design, Google aims to meet the evolving needs of enterprises running foundation models in production—whether for search, content generation, recommendation systems, or interactive applications.

In summary, Ironwood represents a targeted evolution in TPU design. It prioritizes the needs of inference-heavy workloads with enhanced compute capabilities, improved efficiency, and tighter integration with Google Cloud’s infrastructure. As AI transitions into an operational phase across industries, hardware purpose-built for inference will become increasingly central to scalable, responsive, and cost-effective AI systems.

The post Google AI Introduces Ironwood: A Google TPU Purpose-Built for the Age of Inference appeared first on MarkTechPost.
