Sensor-Invariant Tactile Representation for Zero-Shot Transfer Across Vision-Based Tactile Sensors

Tactile sensing is a crucial modality for intelligent systems to perceive and interact with the physical world. The GelSight sensor and its variants have emerged as influential tactile technologies, providing detailed information about contact surfaces by transforming tactile data into visual images. However, vision-based tactile sensing lacks transferability between sensors due to design and manufacturing variations, which result in significant differences in tactile signals. Minor differences in optical design or manufacturing processes can create substantial discrepancies in sensor output, causing machine learning models trained on one sensor to perform poorly when applied to others.

Because vision-based tactile sensors output images, computer vision models have been widely applied to tactile data. Researchers have adapted representation learning methods from the vision community, with contrastive learning a popular choice for building tactile and visual-tactile representations for specific tasks. Auto-encoding approaches have also been explored, with some researchers using Masked Auto-Encoders (MAE) to learn tactile representations. General-purpose multimodal approaches combine multiple tactile datasets in LLM frameworks and encode the sensor type as a token. Despite these efforts, current methods often require large datasets, treat sensor types as fixed categories, and lack the flexibility to generalize to unseen sensors.

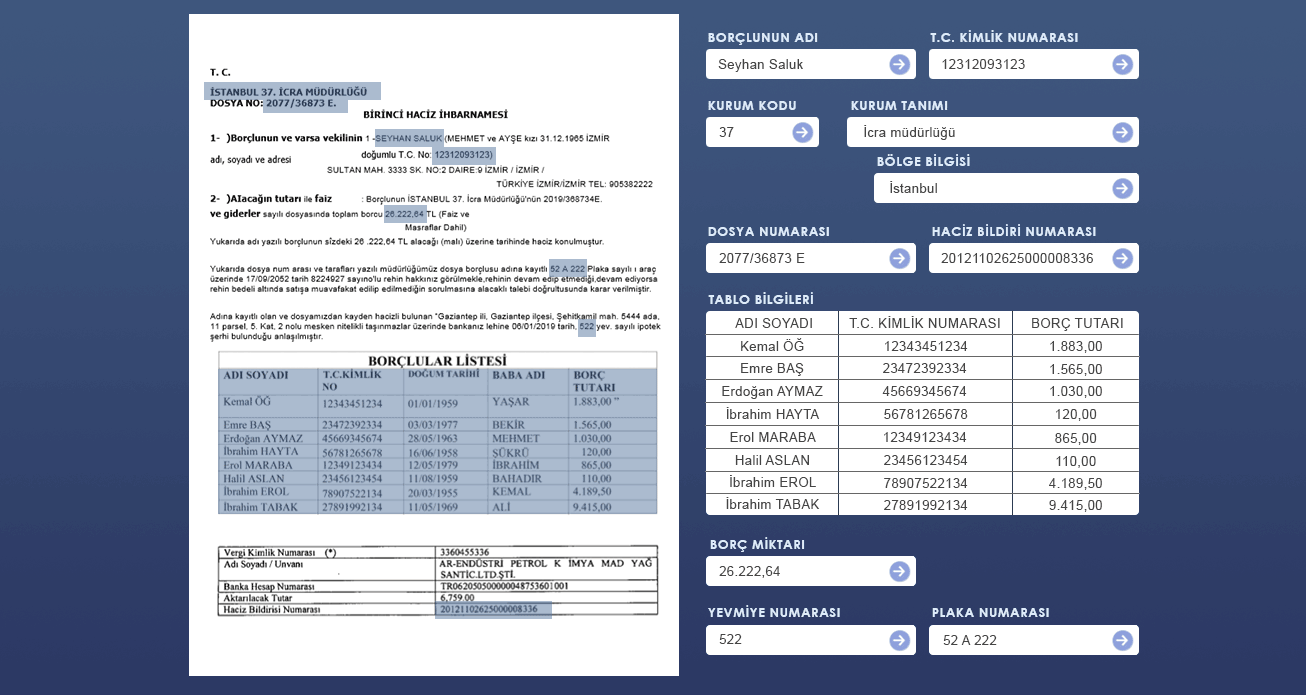

Researchers from the University of Illinois Urbana-Champaign proposed Sensor-Invariant Tactile Representations (SITR), a tactile representation that transfers across various vision-based tactile sensors in a zero-shot manner. It rests on the premise that sensor transferability requires learning effective sensor-invariant representations through exposure to diverse sensor variations. SITR combines three core ideas: using easy-to-acquire calibration images, processed by a transformer encoder, to characterize each individual sensor; applying supervised contrastive learning to emphasize the geometric aspects of tactile data across multiple sensors; and building a large-scale synthetic dataset of 1M examples across 100 sensor configurations.

The network takes a tactile image and a set of calibration images from the same sensor as inputs. The sensor background is subtracted from all input images to isolate the pixel-wise color changes. Following the Vision Transformer (ViT), the images are linearly projected into tokens, and the calibration images need to be tokenized only once per sensor. Two supervision signals guide training: a pixel-wise normal map reconstruction loss on the output patch tokens and a contrastive loss on the class token. During pre-training, a lightweight decoder reconstructs the contact surface as a normal map from the encoder’s output. For the contrastive term, SITR employs Supervised Contrastive Learning (SCL), which extends traditional contrastive approaches by using label information to define which samples count as similar.
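To make the supervised contrastive idea concrete, here is a minimal sketch in plain Python of a loss in the commonly used supervised contrastive form: each sample is pulled toward every other sample with the same label and pushed away from the rest. It is an illustration, not the authors' implementation. The function names, the temperature value, and the reading of a label as "the same contact seen through different sensors" are assumptions made for this example.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector)) or 1.0
    return [x / norm for x in vector]


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Average supervised contrastive loss over anchors that have a positive.

    embeddings: list of equal-length float lists (e.g. class-token features).
    labels: one label per embedding; equal labels mark positives.
            Assumption for this sketch: a label identifies one contact,
            regardless of which sensor produced the image.
    """
    z = [l2_normalize(v) for v in embeddings]
    n = len(z)
    total, counted = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Temperature-scaled cosine similarity to every other sample.
        logits = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n)
            if j != i
        }
        # Numerically stable log-sum-exp over all non-anchor samples.
        max_logit = max(logits.values())
        log_denominator = max_logit + math.log(
            sum(math.exp(value - max_logit) for value in logits.values())
        )
        # Mean negative log-probability of picking a positive.
        total += -sum(logits[j] - log_denominator for j in positives) / len(positives)
        counted += 1
    return total / counted if counted else 0.0


# Toy features: samples 0 and 1 are close together, as are samples 2 and 3.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_aligned = supervised_contrastive_loss(features, [0, 0, 1, 1])
loss_misaligned = supervised_contrastive_loss(features, [0, 1, 0, 1])
```

When the labels agree with the geometry of the feature space, as in `loss_aligned`, the loss is small; when samples with the same label sit far apart, as in `loss_misaligned`, it is large. Minimizing it therefore drives same-label samples together, which is the property a sensor-invariant class token needs.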

In object classification tests on the researchers’ real-world dataset, SITR outperforms all baseline models when transferred across different sensors. While most models perform well in no-transfer settings, they fail to generalize when tested on a different sensor. This shows SITR’s ability to capture meaningful, sensor-invariant features that remain robust despite changes in the sensor domain. In pose estimation tasks, where the goal is to estimate 3-DoF position changes from initial and final tactile images, SITR reduces the Root Mean Square Error by approximately 50% compared to baselines. Unlike in classification, ImageNet pre-training only marginally improves pose estimation performance, suggesting that features learned from natural images may not transfer effectively to tactile domains for precise regression tasks.
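For readers unfamiliar with the metric, the following sketch shows how a Root Mean Square Error can be computed per pose component. It is a generic illustration rather than the paper's evaluation code. The function name and the toy numbers are made up for this example, and the article does not specify which three degrees of freedom are measured, so the components are treated as anonymous axes.

```python
import math


def per_axis_rmse(predicted, target):
    """Return one RMSE value per pose component.

    predicted, target: equal-length lists of pose changes, each a list of
    floats with the same number of components (three for a 3-DoF pose).
    """
    if not target or len(predicted) != len(target):
        raise ValueError("predicted and target must be non-empty and equal in length")
    dims = len(target[0])
    squared_error_sums = [0.0] * dims
    for pred_pose, true_pose in zip(predicted, target):
        for k in range(dims):
            squared_error_sums[k] += (pred_pose[k] - true_pose[k]) ** 2
    return [math.sqrt(total / len(target)) for total in squared_error_sums]


# Toy values only, not results from the paper.
toy_predictions = [[1.0, 2.0, 0.0], [3.0, 2.0, 0.0]]
toy_targets = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
toy_rmse = per_axis_rmse(toy_predictions, toy_targets)
```

Because the errors are squared before averaging, a few large mistakes raise the RMSE more than many small ones, so halving it implies a substantial reduction in large pose errors.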

In this paper, the researchers introduced SITR, a tactile representation framework that transfers across various vision-based tactile sensors in a zero-shot manner. They constructed large-scale, sensor-aligned datasets from synthetic and real-world data and developed a training method that lets SITR capture dense, sensor-invariant features. SITR represents a step toward a unified approach to tactile sensing, in which models generalize across different sensor types without retraining or fine-tuning. By removing a key barrier to adopting these sensors, the work has the potential to accelerate progress in robotic manipulation and tactile research.
