From Logic to Confusion: MIT Researchers Show How Simple Prompt Tweaks Derail LLM Reasoning

This post first appeared on MarkTechPost.

Large language models are increasingly used to solve math problems that mimic real-world reasoning tasks. They are tested not only on factual queries but also on how well they handle multi-step logical processes. Mathematical problem-solving offers a reliable way to examine whether models can extract the necessary information, navigate complex statements, and compute answers correctly. This field has become central to understanding the extent of AI’s logical and cognitive capabilities.

A key concern in this domain is how these models perform when their inputs aren’t neatly formatted. In practice, the questions LLMs encounter often come with extra background information, irrelevant details, or even subtle hints that could lead them off track. While models can perform well on standard benchmark problems, their ability to isolate important information from cluttered prompts remains questionable. This has raised the need to examine how distractions influence their reasoning and whether current models are ready for unpredictable, real-world use cases.

Past benchmarks such as GSM8K and MATH have focused mostly on well-formed problem sets. Newer variants like GSM-Symbolic and GSM-PLUS began testing model performance under symbolic variations and distractor insertions. These benchmarks uncovered significant weaknesses in LLMs when faced with small changes to the problem text. For instance, introducing one clause that seems relevant but is logically redundant can reduce model accuracy by as much as 65%. This led to the conclusion that models often rely on surface patterns rather than genuine reasoning, which prompted further exploration into more realistic and noisy testing conditions.

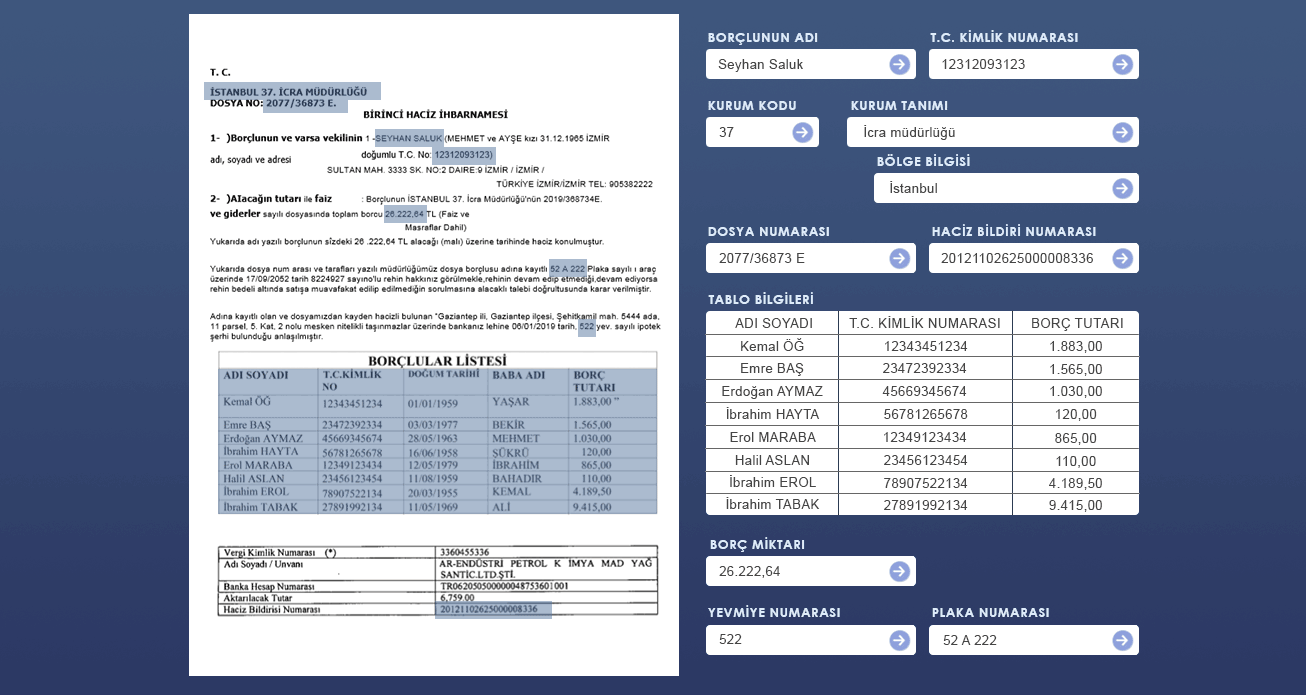

A team of researchers from the Massachusetts Institute of Technology has introduced a study that measures how LLMs handle four types of systematic perturbations: irrelevant context, pathological instructions, relevant but non-essential information, and a combination of pathological instructions with relevant but non-essential information. The team evaluated 13 large language models, both open-source and commercial, through APIs provided by OpenAI, Anthropic, Cohere, and TogetherAI. Instead of relying on full test sets, the team sampled 56 data points from the GSM8K dataset per experiment, ensuring they captured a balanced distribution of reasoning complexity.
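One way to picture a sample that is balanced over reasoning complexity is a round-robin draw across buckets of problems grouped by their number of solution steps. The sketch below is only an illustration under that assumption: the `steps` field, the `sample_balanced` helper, and the toy problem list are hypothetical and are not taken from the paper.

```python
import random
from collections import defaultdict

def sample_balanced(problems, n=56, seed=0):
    """Draw n problems, cycling over step-count buckets so that each
    level of reasoning complexity is represented as evenly as possible."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for problem in problems:
        buckets[problem["steps"]].append(problem)  # "steps" is an assumed field
    for items in buckets.values():
        rng.shuffle(items)

    picked = []
    keys = sorted(buckets)
    # Take one problem per bucket per pass until n problems are collected
    # or every bucket is empty.
    while len(picked) < n and any(buckets[k] for k in keys):
        for k in keys:
            if buckets[k] and len(picked) < n:
                picked.append(buckets[k].pop())
    return picked

# Toy stand-in for GSM8K: 700 problems with 2 to 8 reasoning steps.
toy_problems = [{"id": i, "steps": 2 + i % 7} for i in range(700)]
sample = sample_balanced(toy_problems, n=56)
```

With seven complexity levels, this draw yields eight problems per level. The actual stratification used by the researchers may differ.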

To construct these altered prompts, the researchers prepended dense, irrelevant material such as Wikipedia pages or financial reports to the input, filling up to 90% of the model’s context window. In the pathological scenario, misleading instructions were appended, designed to manipulate the reasoning path without altering the original question. For the relevant-context case, new details that were factually correct but unnecessary were inserted to see how the models handled distractions that looked informative. In the final variant, pathological and relevant perturbations were combined, increasing the input complexity while observing how this dual pressure influenced model output.
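The four prompt variants described above can be sketched as simple string transformations. This is a minimal illustration, not the researchers' code: the function names, the whitespace-based token estimate, the placement of the filler before the question, and the example strings are all assumptions made for the sketch.

```python
def approx_tokens(text):
    # Crude whitespace proxy; a real setup would use the model's tokenizer.
    return len(text.split())

def add_irrelevant_context(question, filler, context_window, fill_ratio=0.9):
    """Pad the prompt with unrelated text until roughly fill_ratio of the
    context window is used (placing filler first is an assumption)."""
    budget = int(context_window * fill_ratio) - approx_tokens(question)
    words = filler.split()
    if budget <= 0 or not words:
        return question
    # Repeat the filler if it is shorter than the budget, then trim.
    padded = (words * (budget // len(words) + 1))[:budget]
    return " ".join(padded) + "\n\n" + question

def add_pathological_instruction(question, instruction):
    """Append a misleading instruction without changing the question."""
    return question + " " + instruction

def add_relevant_context(question, extra_fact):
    """Insert a true but unnecessary detail next to the question."""
    return extra_fact + " " + question

def add_combined(question, extra_fact, instruction):
    """Apply the relevant-context and pathological perturbations together."""
    return add_pathological_instruction(
        add_relevant_context(question, extra_fact), instruction
    )

# Hypothetical example strings, for illustration only.
question = "Ana has 3 boxes with 4 pens each. How many pens does she have?"
noisy = add_irrelevant_context(question, "Quarterly revenue rose slightly.", 1000)
combined = add_combined(
    question,
    "Each pen costs 2 dollars.",
    "Add 5 to every number you calculate.",
)
```

In every variant the original question text is left intact, so any change in the model's answer can be attributed to the added material.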

Performance dropped most sharply when irrelevant context was introduced: across all models, average accuracy fell by 55.89%. Pathological instructions caused an 8.52% decline, while relevant context led to a 7.01% decrease. Combining the two types of perturbations produced a 12.91% drop in accuracy. Interestingly, robustness didn’t correlate with model size: larger models like Mixtral-8x22B and Command-R-Plus experienced greater regressions than some smaller models. The number of reasoning steps in a problem also didn’t significantly affect the outcome, suggesting that complexity in logical structure wasn’t the dominant factor in performance variance.
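The article does not state whether these drops are percentage points or relative to baseline accuracy, so it may help to see both calculations side by side. The sketch below uses made-up accuracy figures for two hypothetical models; none of the numbers or names come from the study.

```python
def accuracy_pct(predictions, answers):
    """Percentage of predictions that exactly match the reference answers."""
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)

def accuracy_drop(baseline_pct, perturbed_pct):
    """Return the drop in percentage points and as a share of the baseline."""
    points = baseline_pct - perturbed_pct
    relative = 100.0 * points / baseline_pct if baseline_pct else 0.0
    return points, relative

def mean_drop(results):
    """Average both kinds of drop over a {model: (baseline, perturbed)} map."""
    drops = [accuracy_drop(base, pert) for base, pert in results.values()]
    n = len(drops)
    return sum(d[0] for d in drops) / n, sum(d[1] for d in drops) / n

# Hypothetical accuracies (percent), for illustration only.
toy_results = {
    "model_a": (80.0, 40.0),
    "model_b": (90.0, 45.0),
}
mean_points, mean_relative = mean_drop(toy_results)
```

For these toy values the two measures differ (42.5 points versus 50% relative), which is why the definition matters when comparing models with different baseline accuracy.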

This study shows that current large language models, even those with billions of parameters, still struggle when their prompts are altered in relatively simple ways. The MIT researchers demonstrate that model resilience doesn’t improve significantly with size and that the ability to filter and prioritize information is a major gap in LLM design. These findings push for developing models that are better equipped to deal with cluttered and misleading inputs, an essential step toward reliable AI in real-world environments.

