Kirill Solodskih, Co-Founder and CEO of TheStage AI – Interview Series

Kirill Solodskih, PhD, is the Co-Founder and CEO of TheStage AI, as well as a seasoned AI researcher and entrepreneur with over a decade of experience in optimizing neural networks for real-world business applications. In 2024, he co-founded TheStage AI, which secured $4.5 million in funding to fully automate neural network acceleration across any hardware platform.

Previously, as a Team Lead at Huawei, Kirill led the acceleration of AI camera applications for Qualcomm NPUs, contributing to the performance of the P50 and P60 smartphones and earning multiple patents for his innovations. His research has been featured at leading conferences such as CVPR and ECCV, where it received awards and industry-wide recognition. He also hosts a podcast on AI optimization and inference.

What inspired you to co-found TheStage AI, and how did you transition from academia and research to tackling inference optimization as a startup founder?

What eventually became TheStage AI started with my work at Huawei, where I was deep into automating deployments and optimizing neural networks. Those initiatives laid the groundwork for some of our groundbreaking innovations, and that’s where I saw the real challenge. Training a model is one thing, but getting it to run efficiently in the real world and making it accessible to users is another. Deployment is the bottleneck that holds back a lot of great ideas from coming to life. To make something as easy to use as ChatGPT, there are a lot of back-end challenges involved. From a technical perspective, neural network optimization is about minimizing parameters while keeping performance high. It’s a tough math problem with plenty of room for innovation.

Manual inference optimization has long been a bottleneck in AI. Can you explain how TheStage AI automates this process and why it’s a game-changer?

TheStage AI tackles a major bottleneck in AI: manual compression and acceleration of neural networks. Neural networks have billions of parameters, and figuring out which ones to remove for better performance is nearly impossible by hand. ANNA (Automated Neural Networks Analyzer) automates this process, identifying which layers to exclude from optimization, similar to how ZIP compression was first automated.

This changes the game by making AI adoption faster and more affordable. Instead of relying on costly manual processes, startups can optimize models automatically. The technology gives businesses a clear view of performance and cost, ensuring efficiency and scalability without guesswork.

TheStage AI claims to reduce inference costs by up to 5x — what makes your optimization technology so effective compared to traditional methods?

TheStage AI cuts inference costs by up to 5x with an optimization approach that goes beyond traditional methods. Instead of applying the same algorithm to the entire neural network, ANNA breaks it down into individual layers and decides which algorithm to apply to each part to deliver the desired compression while maximizing the model’s quality. By combining smart mathematical heuristics with efficient approximations, our approach is highly scalable and makes AI adoption easier for businesses of all sizes. We also integrate flexible compiler settings to optimize networks for specific hardware like iPhones or NVIDIA GPUs. This gives us more control to fine-tune performance, increasing speed without losing quality.
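To illustrate the idea of choosing a different algorithm per layer, here is a minimal sketch in Python. It is not ANNA's actual method, which the interview does not describe in detail. It assumes a simple greedy heuristic, and the layer names, option labels, sizes, and loss estimates are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Option:
    name: str    # hypothetical algorithm label, e.g. a bit-width
    size: float  # layer size in MB after applying the option
    loss: float  # estimated quality loss, arbitrary units

def choose_per_layer(layers, budget_mb):
    """Greedy per-layer selection (an assumed heuristic, not ANNA).

    Start from the lowest-loss option for every layer, then repeatedly
    take the single switch that saves the most size per unit of added
    loss until the total size fits the budget.
    """
    choice = {name: min(opts, key=lambda o: o.loss) for name, opts in layers.items()}
    while sum(o.size for o in choice.values()) > budget_mb:
        best = None
        for name, opts in layers.items():
            current = choice[name]
            for opt in opts:
                saved = current.size - opt.size
                if saved <= 0:
                    continue  # this option does not shrink the layer
                added = max(opt.loss - current.loss, 1e-9)
                score = saved / added
                if best is None or score > best[0]:
                    best = (score, name, opt)
        if best is None:
            raise ValueError("budget cannot be met with the given options")
        choice[best[1]] = best[2]
    return choice

# Hypothetical model: three layers, each with its own candidate options.
layers = {
    "embed": [Option("fp16", 100, 0.0), Option("int8", 50, 0.5), Option("int4", 25, 3.0)],
    "attention": [Option("fp16", 200, 0.0), Option("int8", 100, 0.1), Option("int4", 50, 0.4)],
    "head": [Option("fp16", 40, 0.0), Option("int8", 20, 2.0)],
}

plan = choose_per_layer(layers, budget_mb=200)
for layer, opt in plan.items():
    print(layer, opt.name, opt.size)
```

In this toy setup the layer that tolerates compression best is compressed hardest, while the sensitive layers are left untouched, which is the general intuition behind treating layers individually instead of uniformly.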

How does TheStage AI’s inference acceleration compare to PyTorch’s native compiler, and what advantages does it offer AI developers?

TheStage AI accelerates inference far beyond the native PyTorch compiler. PyTorch uses a “just-in-time” compilation method, which compiles the model each time it runs. This leads to long startup times, sometimes taking minutes or even longer. In scalable environments, this can create inefficiencies, especially when new GPUs need to be brought online to handle increased user load, causing delays that impact the user experience.

In contrast, TheStage AI allows models to be pre-compiled, so once a model is ready, it can be deployed instantly. This leads to faster rollouts, improved service efficiency, and cost savings. Developers can deploy and scale AI models faster, without the bottlenecks of traditional compilation, making it more efficient and responsive for high-demand use cases.

Can you share more about TheStage AI’s QLIP toolkit and how it enhances model performance while maintaining quality?

QLIP, TheStage AI’s toolkit, is a Python library which provides an essential set of primitives for quickly building new optimization algorithms tailored to different hardware, like GPUs and NPUs. The toolkit includes components like quantization, pruning, specification, compilation, and serving, all critical for developing efficient, scalable AI systems.

What sets QLIP apart is its flexibility. It lets AI engineers prototype and implement new algorithms with just a few lines of code. For example, a recent AI conference paper on quantizing neural networks can be converted into a working algorithm using QLIP’s primitives in minutes. This makes it easy for developers to integrate the latest research into their models without being held back by rigid frameworks.

Unlike traditional open-source frameworks that restrict you to a fixed set of algorithms, QLIP allows anyone to add new optimization techniques. This adaptability helps teams stay ahead of the rapidly evolving AI landscape, improving performance while ensuring flexibility for future innovations.

You’ve contributed to AI quantization frameworks used in Huawei’s P50 & P60 cameras. How did that experience shape your approach to AI optimization?

My experience working on AI quantization frameworks for Huawei’s P50 and P60 gave me valuable insights into how optimization can be streamlined and scaled. When I first started with PyTorch, working with the complete execution graph of neural networks was rigid, and quantization algorithms had to be implemented manually, layer by layer. At Huawei, I built a framework that automated the process. You simply input the model, and it would automatically generate the code for quantization, eliminating manual work.

This led me to realize that automation in AI optimization is about enabling speed without sacrificing quality. One of the algorithms I developed and patented became essential for Huawei, particularly when they had to transition from Kirin processors to Qualcomm due to sanctions. It allowed the team to quickly adapt neural networks to Qualcomm’s architecture without losing performance or accuracy.

By streamlining and automating the process, we cut development time from over a year to just a few months. This made a huge impact on a product used by millions and shaped my approach to optimization, focusing on speed, efficiency, and minimal quality loss. That’s the mindset I bring to ANNA today.
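As a rough illustration of the layer-by-layer quantization mentioned in this answer, the sketch below applies symmetric int8 quantization to every layer of a toy model automatically. It is not the Huawei framework or TheStage AI's code. The layer names, weights, and the helpers `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]

# Hypothetical model: layer name -> flat list of float weights.
model = {
    "conv1": [0.12, -0.5, 0.33, 0.9],
    "fc": [-1.5, 0.02, 0.75],
}

# Apply the same routine to every layer instead of hand-writing it per layer.
quantized = {name: quantize_int8(w) for name, w in model.items()}

for name, (q, scale) in quantized.items():
    restored = dequantize(q, scale)
    err = max(abs(a - b) for a, b in zip(model[name], restored))
    print(name, q, f"max error {err:.4f}")
```

Each layer gets its own scale, so the rounding error per weight is bounded by half of that layer's scale.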

Your research has been featured at CVPR and ECCV — what are some of the key breakthroughs in AI efficiency that you’re most proud of?

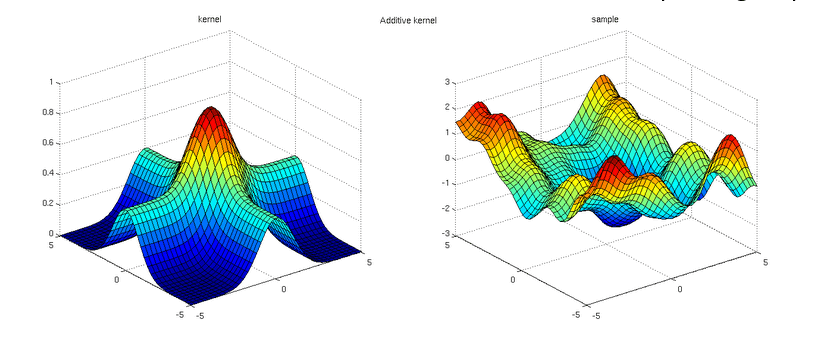

When I’m asked about my achievements in AI efficiency, I always think back to our paper that was selected for an oral presentation at CVPR 2023. Being chosen for an oral presentation at such a conference is rare, as only 12 papers are selected. On top of that, Generative AI typically dominates the spotlight, and our paper took a different approach, focusing on the mathematical side, specifically the analysis and compression of neural networks.

We developed a method that helped us understand how many parameters a neural network truly needs to operate efficiently. By applying techniques from functional analysis and moving from a discrete to a continuous formulation, we were able to achieve good compression results while keeping the ability to integrate these changes back into the model. The paper also introduced several novel algorithms that hadn’t been used by the community and found further application.

This was one of my first papers in the field of AI, and importantly, it was the result of our team’s collective effort, including my co-founders. It was a significant milestone for all of us.

Can you explain how Integral Neural Networks (INNs) work and why they’re an important innovation in deep learning?

Traditional neural networks use fixed matrices, similar to Excel tables, where the size and parameters are predetermined. INNs, however, describe networks as continuous functions, offering much more flexibility. Think of it like a blanket draped over pins of different heights: the surface of the blanket represents the continuous wave.

What makes INNs exciting is their ability to dynamically “compress” or “expand” based on available resources, similar to how an analog signal is digitized into sound. You can shrink the network without sacrificing quality, and when needed, expand it back without retraining.

We tested this, and while traditional compression methods lead to significant quality loss, INNs maintain close-to-original quality even under extreme compression. The math behind it is more unconventional for the AI community, but the real value lies in its ability to deliver solid, practical results with minimal effort.
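The continuous view can be illustrated with a toy example. The sketch below is not the INN implementation from the paper. It assumes a single "neuron" whose weights are a piecewise-linear function on [0, 1], and it evaluates the integral of weight times input with the trapezoid rule at two different resolutions. The function names, the control values, and the input signal are hypothetical.

```python
import math

def interpolate(control, x):
    """Piecewise-linear function on [0, 1] defined by evenly spaced control values."""
    pos = x * (len(control) - 1)
    i = min(int(pos), len(control) - 2)
    t = pos - i
    return (1 - t) * control[i] + t * control[i + 1]

def integral_neuron(control, signal, n):
    """Approximate y = integral over [0, 1] of w(x) * signal(x) dx using n sample points."""
    h = 1.0 / (n - 1)
    total = 0.0
    for k in range(n):
        x = k * h
        edge = 0.5 if k in (0, n - 1) else 1.0  # trapezoid end-point weights
        total += edge * interpolate(control, x) * signal(x)
    return total * h

# Hypothetical "trained" weight function, stored as 33 control values.
control = [math.sin(2 * math.pi * k / 32) for k in range(33)]

def signal(x):
    """Hypothetical input signal."""
    return x

full = integral_neuron(control, signal, 65)   # fine discretization
small = integral_neuron(control, signal, 17)  # about 4x fewer sample points
print(full, small)
```

Because the weights are a smooth function and not a fixed table, the same stored parameters can be sampled at either resolution, and the two outputs stay close without any retraining.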

TheStage AI has worked on quantum annealing algorithms — how do you see quantum computing playing a role in AI optimization in the near future?

When it comes to quantum computing and its role in AI optimization, the key takeaway is that quantum systems offer a completely different approach to solving problems like optimization. While we didn’t invent quantum annealing algorithms from scratch, companies like D-Wave provide Python libraries to build quantum algorithms specifically for discrete optimization tasks, which are ideal for quantum computers.

The idea here is that we are not directly loading a neural network into a quantum computer. That’s not possible with current architecture. Instead, we approximate how neural networks behave under different types of degradation, making them fit into a system that a quantum chip can process.

In the future, quantum systems could scale and optimize networks with a precision that traditional systems struggle to match. The advantage of quantum systems lies in their built-in parallelism, something classical systems can only simulate using additional resources. This means quantum computing could significantly speed up the optimization process, especially as we figure out how to model larger and more complex networks effectively.

The real potential comes in using quantum computing to solve massive, intricate optimization tasks and breaking down parameters into smaller, more manageable groups. With technologies like quantum and optical computing, there are vast possibilities for optimizing AI that go far beyond what traditional computing can offer.
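Discrete optimization problems of the kind annealers target are commonly written as a QUBO (quadratic unconstrained binary optimization). The sketch below shows that formulation on a tiny, made-up compression problem and solves it by classical brute force. It uses no D-Wave library and is not TheStage AI's algorithm. The matrix values and their interpretation are assumptions for illustration only.

```python
from itertools import product

def qubo_energy(Q, x):
    """Energy of a binary assignment x under the QUBO matrix Q: sum of Q[i][j] * x[i] * x[j]."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def solve_brute_force(Q):
    """Try every binary assignment; feasible only for very small problems."""
    n = len(Q)
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))

# Hypothetical problem: x[i] = 1 means "compress layer i".
# Diagonal entries: estimated quality loss minus the reward for saved compute.
# Off-diagonal entries: extra degradation when both layers are compressed.
Q = [
    [-2.0, 1.5, 0.0],
    [0.0, -1.0, 0.5],
    [0.0, 0.0, -3.0],
]

best = solve_brute_force(Q)
print(best, qubo_energy(Q, best))
```

Brute force scales as 2^n, which is why larger instances are the ones where annealing hardware or dedicated solvers become interesting.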

What is your long-term vision for TheStage AI? Where do you see inference optimization heading in the next 5-10 years?

In the long term, TheStage AI aims to become a global Model Hub where anyone can easily access an optimized neural network with the desired characteristics, whether for a smartphone or any other device. The goal is to offer a drag-and-drop experience, where users input their parameters and the system automatically generates the network. If the network doesn’t already exist, it will be created automatically using ANNA.

Our goal is to make neural networks run directly on user devices, cutting costs by 20 to 30 times. In the future, this could almost eliminate costs completely, as the user’s device would handle the computation rather than relying on cloud servers. This, combined with advancements in model compression and hardware acceleration, could make AI deployment significantly more efficient.

We also plan to integrate our technology with hardware solutions, such as sensors, chips, and robotics, for applications in fields like autonomous driving and robotics. For instance, we aim to build AI cameras capable of functioning in any environment, whether in space or under extreme conditions like darkness or dust. This would make AI usable in a wide range of applications and allow us to create custom solutions for specific hardware and use cases.

Thank you for the great interview. Readers who wish to learn more should visit TheStage AI.

The post Kirill Solodskih, Co-Founder and CEO of TheStage AI – Interview Series appeared first on Unite.AI.
