It’s Not About What AI Can Do for Us, But What We Can Do for AI

The post It’s Not About What AI Can Do for Us, But What We Can Do for AI appeared first on Unite.AI.

Most view artificial intelligence (AI) through a one-way lens. The technology only exists to serve humans and achieve new levels of efficiency, accuracy, and productivity. But what if we’re missing half of the equation? And what if, by doing so, we’re only amplifying the technology’s flaws?

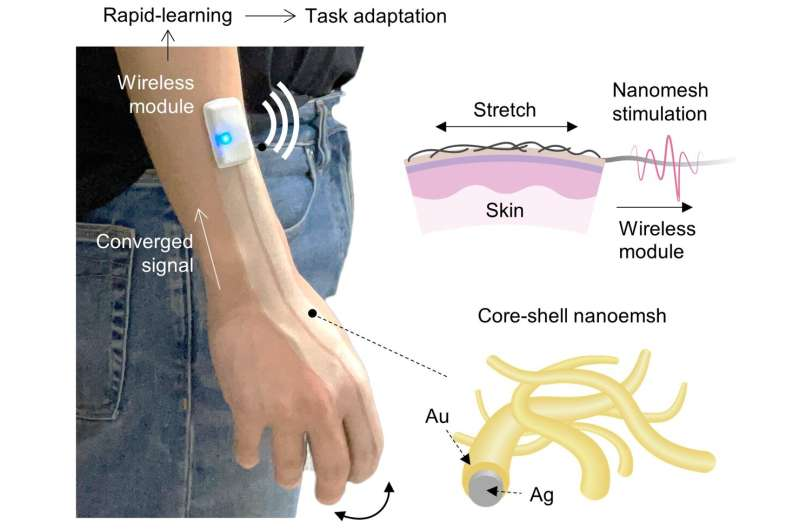

AI is in its infancy and still faces significant limitations in reasoning, data quality, and understanding concepts like trust, value, and incentives. The divide between current capabilities and true “intelligence” is substantial. The good news? We can change this by becoming active collaborators rather than passive consumers of AI.

Humans hold the key to intelligent evolution by providing better reasoning frameworks, feeding quality data, and bridging the trust gap. As a result, man and machine can work side-by-side for a win-win – with better collaboration generating better data and better outcomes.

Let’s consider what a more symbiotic relationship could look like and how meaningful collaboration between partners can benefit both sides of the AI equation.

The required relationship between man and machine

AI is undoubtedly great at analyzing vast datasets and automating complex tasks. However, the technology remains fundamentally limited in its ability to think like us. First, these models and platforms struggle with reasoning beyond their training data. Pattern recognition and statistical prediction pose no problem, but the contextual judgment and logical frameworks we take for granted are more challenging to replicate. This reasoning gap means AI often falters when faced with nuanced scenarios or ethical judgment.

Second, there’s the “garbage in, garbage out” problem of data quality. Current models are trained on vast troves of information gathered with and without consent. Unverified or biased information is used regardless of proper attribution or authorization, resulting in unverified or biased AI. The “data diet” of models is therefore questionable at best and scattershot at worst. It’s helpful to think of this impact in nutritional terms. If humans only eat junk food, we’re slow and sluggish. If agents only consume copyrighted and second-hand material, their performance is similarly hampered, with output that’s inaccurate, unreliable, and general rather than specific. This is still far from the autonomous and proactive decision-making promised in the coming wave of agents.

Third, and critically, AI is still blind to who and what it’s interacting with. It cannot distinguish between aligned and misaligned users, struggles to verify relationships, and fails to understand concepts like trust, value exchange, and stakeholder incentives – core elements that govern human interactions.

AI problems with human solutions

We need to think of AI platforms, tools, and agents less as servants and more as assistants that we can help train. For starters, let’s look at reasoning. We can introduce new logical frameworks, ethical guidelines, and strategic thinking that AI systems can’t develop alone. Through thoughtful prompting and careful supervision, we can complement AI’s statistical strengths with human wisdom – teaching these systems not only to recognize patterns but also to understand the contexts that make those patterns meaningful.

Likewise, rather than allowing AI to train on whatever information it can scrape from the internet, humans can curate higher-quality datasets that are verified, diverse, and ethically sourced.

This means developing better attribution systems where content creators are recognized and compensated for their contributions to training.

Emerging frameworks make this possible. By uniting online identities under one banner and deciding whether and what they’re comfortable sharing, users can equip models with zero-party information that respects privacy, consent, and regulations. Better yet, by tracking this information on the blockchain, users and modelmakers can see where information comes from and adequately compensate creators for providing this “new oil.” This is how we acknowledge users for their data and bring them in on the information revolution.
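To make the idea concrete, here is a minimal sketch of consent-gated, traceable training data. It assumes a simple hash-chained log as a stand-in for a blockchain and a payout split by number of contributions. Every name in it (`Contribution`, `build_ledger`, `verify_ledger`, `split_payout`) is hypothetical and does not come from any specific framework.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Contribution:
    creator: str      # hypothetical creator identifier
    content: str      # the data the creator chose to share
    consented: bool   # explicit opt-in for training use


GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(prev_hash, creator, content):
    """Hash an entry together with the previous entry's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "creator": creator, "content": content},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_ledger(contributions):
    """Append only consented contributions to a hash-chained log."""
    ledger = []
    prev = GENESIS
    for c in contributions:
        if not c.consented:
            continue  # data without consent never enters the log
        prev = record_hash(prev, c.creator, c.content)
        ledger.append({"creator": c.creator, "content": c.content, "hash": prev})
    return ledger


def verify_ledger(ledger):
    """Recompute the chain; any edited entry breaks every later hash."""
    prev = GENESIS
    for entry in ledger:
        if record_hash(prev, entry["creator"], entry["content"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


def split_payout(ledger, pool):
    """Divide a payout pool by each creator's share of logged entries."""
    counts = {}
    for entry in ledger:
        counts[entry["creator"]] = counts.get(entry["creator"], 0) + 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {creator: pool * n / total for creator, n in counts.items()}
```

Counting entries is the simplest possible attribution rule and is used here only for illustration. A real system would likely weigh contributions by quality or usage, and would rely on an actual distributed ledger rather than an in-memory list.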

Finally, bridging the trust gap means arming models with human values and attitudes. This means designing mechanisms that recognize stakeholders, verify relationships, and differentiate between aligned and misaligned users. As a result, we help AI understand its operational context – who benefits from its actions, what contributes to its development, and how value flows through the systems it participates in.

For example, agents backed by blockchain infrastructure are well suited to this. They can recognize and prioritize users with demonstrated ecosystem buy-in through reputation, social influence, or token ownership. This allows AI to align incentives by giving more weight to stakeholders with skin in the game, creating governance systems where verified supporters participate in decision-making based on their level of engagement. As a result, AI more deeply understands its ecosystem and can make decisions informed by genuine stakeholder relationships.
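As a rough illustration of weighting stakeholders by engagement, the sketch below combines reputation, social influence, and token ownership into a single weight and uses it to tally a decision. The `Stakeholder` fields, the `SIGNAL_WEIGHTS` values, and both function names are assumptions made for this example, not part of any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stakeholder:
    name: str
    reputation: float    # 0.0 to 1.0, hypothetical reputation score
    influence: float     # 0.0 to 1.0, hypothetical social-influence score
    token_share: float   # 0.0 to 1.0, fraction of tokens held
    verified: bool       # whether the relationship has been verified


# Illustrative weights only; a real system would need to tune and justify these.
SIGNAL_WEIGHTS = {"reputation": 0.5, "influence": 0.2, "token_share": 0.3}


def engagement_weight(s):
    """Combine the three signals into one weight; unverified users get zero."""
    if not s.verified:
        return 0.0
    return (
        SIGNAL_WEIGHTS["reputation"] * s.reputation
        + SIGNAL_WEIGHTS["influence"] * s.influence
        + SIGNAL_WEIGHTS["token_share"] * s.token_share
    )


def weighted_approval(stakeholders, votes):
    """Return the fraction of participating engagement weight that voted yes."""
    total = 0.0
    yes = 0.0
    for s in stakeholders:
        if s.name not in votes:
            continue  # abstentions are ignored
        w = engagement_weight(s)
        total += w
        if votes[s.name]:
            yes += w
    return yes / total if total > 0 else 0.0
```

In this sketch, verification acts as a gate: an unverified account carries no weight no matter how many tokens it holds, so a large anonymous holder cannot outvote verified participants.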

Don’t lose sight of the human element in AI

Plenty has been said about the rise of this technology and how it threatens to overhaul industries and wipe out jobs. However, baking in guardrails can ensure that AI augments rather than overrides the human experience. For example, the most successful AI implementations don’t replace humans but extend what we can accomplish together. When AI handles routine analysis and humans provide creative direction and ethical oversight, both sides contribute their unique strengths.

When done right, AI promises to improve the quality and efficiency of countless human processes. But when done wrong, it’s limited by questionable data sources and only mimics intelligence rather than displaying it. It’s up to us, the human side of the equation, to make these models smarter and ensure that our values, judgment, and ethics remain at their heart.

Trust is non-negotiable for this technology to go mainstream. When users can verify where their data goes, see how it’s used, and participate in the value it creates, they become willing partners rather than reluctant subjects. Similarly, when AI systems can leverage aligned stakeholders and transparent data pipelines, they become more trustworthy. In turn, they’re more likely to gain access to our most important private and professional spaces, creating a flywheel of better data access and improved outcomes.

So, heading into this next phase of AI, let’s focus on connecting man and machine with verifiable relationships, quality data sources, and precise systems. We should ask not what AI can do for us but what we can do for AI.

